Drones are widely used in precision agriculture, and one of their major applications is spraying pesticides, herbicides, and fertilizers over fields. The aim of this project is to develop reliable sense-and-avoid technology that makes UAV-based spraying fully autonomous. The major challenge in achieving autonomy is that the drone must fly low in an agricultural environment full of obstacles such as trees, poles, and cables. As an engineer at TIH, IIT-Bombay, I worked on the development of a robust obstacle detection and avoidance suite capable of navigating around the common obstacles found in agricultural fields. The figure below shows our sense-and-avoid technology in operation.

In collaboration with General Aeronautics (GA), Bangalore, we tested our technology in different scenarios:

* Trees of different shapes and sizes
* Detection and avoidance at different heights
* Detection and avoidance for multiple obstacles

We have also successfully tested our implementation in the presence of dust and high winds. Currently, we are modifying the avoidance algorithm to maximize spraying in the marked area of the field.

Jul 28, 2024

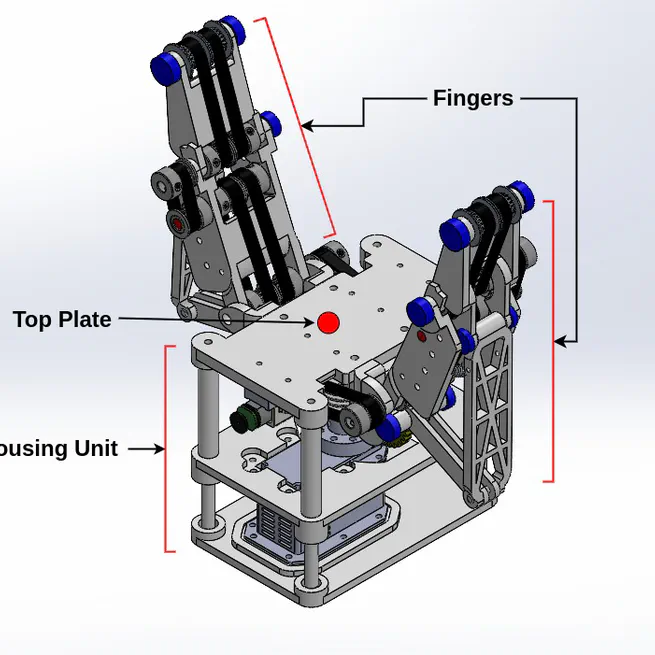

Intelligent Grasping and In-Hand Manipulation (Final Year Project)

Overview: The intelligent grasping project successfully addressed grasp location detection for non-standard objects, but post-grasp dexterity needed further development. Manipulating an object after grasping it remains a critical challenge in robotic manipulation, and it is the challenge I chose to address in my final year project on in-hand manipulation.

Design and Implementation:

* Mechanical Design: We identified that traditional thread-driven underactuated grippers often lack sufficient grasping force. To resolve this, we developed a four-bar linkage mechanism with an active belt system, enabling enhanced manipulation capabilities. The active belt system is driven by a single N20 micro motor that controls both links in a finger, whereas conventional designs use a separate motor for each link.
* Actuation and Control: The active belt mechanism, powered by a spur and worm drive, was coupled with a Dynamixel servo motor to centralize control over the gripper's finger movements. This allowed precise in-hand manipulation of objects with varying geometries. The mechanically underactuated design with an active surface enabled both secure grasping and intricate manipulation.

Key Innovations:

* Single-motor-driven active belt system for compact and efficient control of finger movement.
* Spur and worm mechanism for centralized control over multiple finger segments, reducing mechanical complexity while maintaining high dexterity.
* Integration of a Dynamixel servo for robust control, allowing smooth and adaptive manipulation of different objects.

Feb 28, 2024

Created a maze-solving bot in ROS-Gazebo that uses the depth-first search (DFS) algorithm to solve the maze.
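To illustrate the idea behind the solver, here is a minimal sketch of depth-first search on a grid maze. It is not the code that ran on the bot: the grid encoding (`#` for walls), the function name `solve_maze_dfs`, and the neighbour order are all assumptions made for this example, and the ROS-Gazebo sensing and motion layers are left out entirely.

```python
from typing import List, Optional, Set, Tuple

Cell = Tuple[int, int]  # (row, column)


def solve_maze_dfs(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return one start-to-goal path found by depth-first search, or None.

    Hypothetical encoding: '#' is a wall, any other character is free space.
    """
    rows, cols = len(grid), len(grid[0])
    visited: Set[Cell] = set()
    path: List[Cell] = []

    def visit(cell: Cell) -> bool:
        r, c = cell
        # Reject cells outside the grid, walls, and cells already explored.
        if not (0 <= r < rows and 0 <= c < cols):
            return False
        if grid[r][c] == "#" or cell in visited:
            return False
        visited.add(cell)
        path.append(cell)
        if cell == goal:
            return True
        # Go as deep as possible along one direction before trying the next.
        for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
            if visit((r + dr, c + dc)):
                return True
        path.pop()  # dead end: backtrack
        return False

    return path if visit(start) else None


if __name__ == "__main__":
    maze = [
        "S.#",
        "#.#",
        "#.G",
    ]
    print(solve_maze_dfs(maze, (0, 0), (2, 2)))
```

DFS returns the first path it finds, which is not necessarily the shortest one; a breadth-first search would be the usual swap if path length mattered.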

Dec 26, 2021

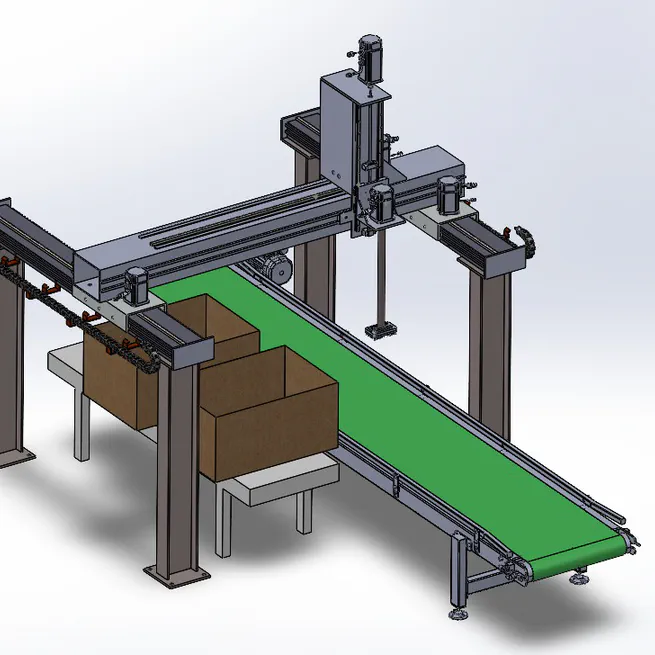

Delta Electronics Automation Contest (March 2021)

Project: Gantry-Based System for Efficient Segregation and Packaging

In March 2021, I was part of a team that participated in the Delta Electronics Automation Contest, where we submitted a proposal to address the challenge of efficiently segregating and packaging different-sized boxes in a warehouse setting.

Project Overview: Our solution proposed a gantry-based system designed to perform pick, place, and sorting operations for various box sizes. The system aimed to maximize volume occupancy inside a larger container, using a vision system to determine the size and orientation of the boxes.

Development Process:

* Design and Research: We refined our gantry robot design through consultations with professors and industry experts.
* Manufacturing: After finalizing the design, we employed different manufacturing techniques to produce the parts. Despite the challenges posed by the pandemic, we successfully fabricated and assembled the robot.

Achievements: We secured the Second Category Prize and ranked in the Top 40 globally.

Project Proposal: Click here to view our work

Working Video: Check out the video here

Jul 23, 2021

e-Yantra Robotics Competition (2020)

Project: Autonomous Drone for Parcel Delivery

In this national-level e-Yantra Robotics Competition, we were challenged to develop a drone capable of delivering parcels across a city, a fascinating and complex problem.

Project Overview: We explored drone control and navigation through simulations, focusing on improving efficiency and safety for urban deliveries. Using ROS (Robot Operating System) and the Gazebo simulator, I integrated algorithms to tackle issues such as drone crashes and delivery speed optimization.

Development Process:

* Obstacle Avoidance: Extended and implemented an obstacle avoidance algorithm to navigate the drone safely through urban environments filled with buildings and bridges.
* Delivery Scheduling: Developed a scheduling algorithm to optimize delivery times, allowing the drone to deliver more parcels in less time.
* Simulation and Testing: Conducted extensive tests in simulated environments to ensure reliable navigation and successful delivery performance.

Achievements: Our optimized system placed us in the top 20 out of 350 national competitors. This project was a key learning experience in drone control, real-time problem-solving, and teamwork.

Project Code: Click here to view our work
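The write-up above does not spell out how the delivery scheduler worked, so the following is only an illustrative sketch of one simple way to order deliveries: a greedy nearest-neighbour heuristic. The function names `schedule_deliveries` and `route_length`, the 2D coordinates, and the single-trip assumption (the drone visits every drop point without returning to the depot) are all hypothetical and are not taken from the competition code.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def schedule_deliveries(depot: Point, drops: Dict[str, Point]) -> List[str]:
    """Order drop points greedily: always fly to the closest remaining one.

    Hypothetical heuristic for illustration; it is fast but not guaranteed
    to produce the shortest possible route.
    """
    remaining = dict(drops)
    position = depot
    order: List[str] = []
    while remaining:
        nearest = min(remaining, key=lambda name: math.dist(position, remaining[name]))
        order.append(nearest)
        position = remaining.pop(nearest)
    return order


def route_length(depot: Point, drops: Dict[str, Point], order: List[str]) -> float:
    """Total straight-line distance flown when visiting drops in the given order."""
    total = 0.0
    position = depot
    for name in order:
        total += math.dist(position, drops[name])
        position = drops[name]
    return total


if __name__ == "__main__":
    depot = (0.0, 0.0)
    drops = {"A": (5.0, 0.0), "B": (1.0, 0.0), "C": (2.0, 0.0)}
    order = schedule_deliveries(depot, drops)
    print(order, route_length(depot, drops, order))
    print(["A", "B", "C"], route_length(depot, drops, ["A", "B", "C"]))
```

Comparing the greedy order against the naive listing order shows why scheduling matters: the same three parcels can cost very different flight distances depending on the sequence.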

Feb 10, 2021

Moodylyser: Real-time Emotion Detection using Computer Vision

Project Overview: Moodylyser is a real-time emotion detection system that uses computer vision and machine learning to identify and analyze human emotions from facial expressions. The project uses a Convolutional Neural Network (CNN) to extract facial features and detect emotions from a live video feed. The emotions detected are "Anger," "Disgust," "Fear," "Happiness," "Sadness," "Surprise," and "Neutral."

Development Process:

* Face Detection and Preprocessing: Using OpenCV, the system captures a live video feed and detects the user's face, which activates the emotion recognition algorithm. Each frame is converted to grayscale, resized to the dimensions the model requires, and fed into the CNN.
* Model Architecture: The CNN model was implemented in Keras, with over 1.3 million trainable parameters. The model was trained on the FER2013 dataset to classify facial expressions into the corresponding emotions. Techniques such as dropout, regularization, and kernel constraints were used to improve the model's performance.
* Facial Landmarks Detection: The system also uses the dlib library to detect 64 facial landmarks, which are then fed into the CNN to improve emotion detection accuracy.
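As a small illustration of the last step of such a pipeline, the sketch below turns a seven-value classifier output into an emotion label and a confidence. It is an assumption-laden example, not the project's code: it assumes the model emits one raw score per emotion in the same order as the list above, and the names `EMOTIONS`, `softmax`, and `decode_emotion` are hypothetical. The OpenCV capture and the Keras model are deliberately left out so the snippet runs with the standard library alone.

```python
import math
from typing import List, Sequence, Tuple

# Assumed output order: the same order in which the emotions are listed above.
EMOTIONS = ["Anger", "Disgust", "Fear", "Happiness", "Sadness", "Surprise", "Neutral"]


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode_emotion(scores: Sequence[float]) -> Tuple[str, float]:
    """Return the most likely emotion and its probability for one face."""
    if len(scores) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} scores, got {len(scores)}")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


if __name__ == "__main__":
    # Made-up scores for a single frame, for demonstration only.
    label, confidence = decode_emotion([0.1, 0.2, 0.0, 3.0, 0.5, 0.3, 0.4])
    print(f"{label} ({confidence:.2f})")
```

If the model's final layer already applies softmax, the `softmax` call can be skipped and the arg-max taken directly on the output.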

Jul 10, 2020